It’s been almost a year since I started working as a Machine Learning Engineer at an early stage startup. It’s been a roller-coaster ride to say the least, and I have learnt a lot in that period. Today, I thought of reflecting on the lessons learnt and summarising them in this blog post. The list is definitely not exhaustive, but I have tried to cover the most important ones. Since the list was becoming long, I decided to break it down into two parts. The first part contains lessons which are more specific to applying ML in business scenarios, while the second one will contain lessons which are much more general. Some of them might seem very trivial, but even then, it’s surprising how often we tend to overlook or take for granted the most trivial steps.

-

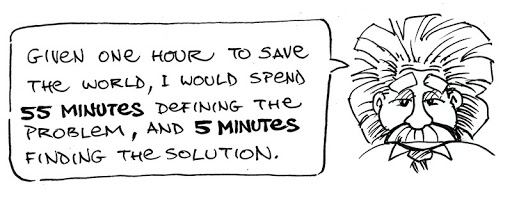

Understand the problem ( business case ): This step seems very obvious, yet it often does not get enough attention. We have to remember that even if we build a State of The Art ( SOTA ) model for a particular task, it is close to useless if it doesn’t perform well for our use-case. It is important that we understand the business case which we are trying to automate or solve using Machine Learning, along with the unique requirements of that problem. It is also important at this step to establish what performance is expected from the model. While you might want to build the best model that you possibly can, a business environment comes with a lot of constraints, such as the availability of resources and time, and you have to build your solution within those constraints.

-

Accounting for the effort in gathering data: If you are in a Kaggle competition, the dataset is generally collected, curated and handed over to you in a proper format. That is rarely the case when you are trying to solve something in a real business scenario. You might have to spend a lot of time gathering the data and extracting it from different sources. On top of that, you might be performing a supervised learning task for which the data exists but the ground truth doesn’t. In that case, you might have to use human resources from within or outside your company to create the ground truth. This is quite expensive, both in terms of time and effort. Not only that, quite often humans vary in their interpretation of what the correct ground truth should be for a particular datapoint. In such cases, it’s important that the annotation instructions are clear and up-to-date so that there’s not much scope for ambiguity. Ideally, there should also be a proper channel through which the annotators can seek clarifications if they are not fully confident about the ground truth for a particular datapoint. To get better output from the annotators, we can use techniques which make their work easier ( see points 6 and 7 ).

-

Importance of Test Set: The Test Set ( or more accurately, HoldOut Set ) plays a very important part in building a Machine Learning model. The Test Set is what we actually use to measure the performance of the model. If the Test Set is not a good representation of the actual distribution of the samples that we expect to encounter in production ( although this might be quite hard to anticipate beforehand ), then we will not be able to get a good estimate of the true performance of our model. If we want finer control, we might create more than one test set, each of them representing a different aspect of the model that we want to test.

For example, if we are building a text classifier, we can have different test sets, one containing short texts, another containing long texts, and so on. We might then find that the model performs well on short texts but not so well on long texts.
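As a minimal sketch of this idea, the snippet below reports accuracy separately for short and long texts. The function name `accuracy_by_slice`, the word-count cut-off `max_short_len` and the toy data are all assumptions made for illustration; in practice the slices should reflect whatever aspects matter for your use-case.

```python
from collections import defaultdict


def accuracy_by_slice(texts, labels, predictions, max_short_len=50):
    """Return accuracy per length bucket ('short' or 'long').

    A text counts as 'short' if it has at most `max_short_len`
    whitespace-separated tokens (an arbitrary, illustrative cut-off).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for text, label, pred in zip(texts, labels, predictions):
        bucket = "short" if len(text.split()) <= max_short_len else "long"
        total[bucket] += 1
        correct[bucket] += int(label == pred)
    return {bucket: correct[bucket] / total[bucket] for bucket in total}


# Toy data: two short texts and two long ones (60 tokens each).
texts = ["good", "bad", "x " * 60, "y " * 60]
labels = [1, 0, 1, 0]
predictions = [1, 0, 0, 0]
print(accuracy_by_slice(texts, labels, predictions))
```

A single overall accuracy of 75% on this toy data would hide the fact that all the errors come from the long texts.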

-

Architecture selection: The Model Architecture that you choose for your task should be decided after taking into account the business and other constraints that you might have. For example, if you are training a model for a task which requires super low latency inference times, you should keep that in mind before you use a super large SOTA model which won’t be able to give you those inference times given the constraints that you will have when you deploy it in production.
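One way to make the latency constraint concrete is to time candidate models before committing to an architecture. The sketch below is only an illustration: `predict` stands in for whatever inference call your model exposes, and the helper name, the warm-up count and the simple rank-based percentile are assumptions of mine. Measurements should ideally be taken on hardware similar to the production environment.

```python
import time


def latency_percentile_ms(predict, inputs, percentile=95, warmup=3):
    """Time `predict` on each input and return a latency percentile in ms.

    `predict` is a hypothetical callable wrapping the model's inference.
    The percentile is a simple rank-based estimate, not an interpolated one.
    """
    if not inputs:
        raise ValueError("inputs must not be empty")
    # Warm-up calls so one-off initialisation costs do not skew the timings.
    for x in inputs[:warmup]:
        predict(x)
    timings = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        timings.append((time.perf_counter() - start) * 1000.0)
    timings.sort()
    index = min(len(timings) - 1, int(len(timings) * percentile / 100))
    return timings[index]


# Usage with a stand-in "model"; replace the lambda with a real inference call.
p95 = latency_percentile_ms(lambda text: text.upper(), ["some input"] * 200)
print(f"p95 latency: {p95:.4f} ms")
```

Comparing this number against the latency budget for each candidate architecture gives an early signal on whether a large model is even an option.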

-

Understanding the pipeline: Understand the flow of the business process end to end. It is quite likely that your model forms one part of a larger process and depends on some upstream tasks. The theoretical best performance of your model is therefore dependent on the performance of those upstream tasks. If the upstream tasks don’t perform well, the downstream task will suffer too.

For example, you might have a business process which has an OCR component followed by an ML model component. If the OCR accuracy is 60% and the ML model accuracy is 95%, the overall performance of the pipeline will still be only about 60% * 95% = 57%. In short, the poor performance of the upstream task caps the performance of the whole pipeline, no matter how good your downstream model is. So, in this scenario, spending more time on improving the ML model won’t help much; you will have to focus on the OCR process here.
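The arithmetic above can be sketched in a few lines. This assumes, as a simplification, that a sample is handled correctly only if every stage gets it right and that each stage’s accuracy is measured on correct input from the previous stage; the function name `pipeline_accuracy` is just for illustration.

```python
def pipeline_accuracy(stage_accuracies):
    """Multiply per-stage accuracies to estimate end-to-end accuracy.

    Simplifying assumption: a sample is correct end to end only if every
    stage in the chain handles it correctly.
    """
    result = 1.0
    for accuracy in stage_accuracies:
        result *= accuracy
    return result


print(f"{pipeline_accuracy([0.60, 0.95]):.1%}")  # OCR 60%, ML model 95%
print(f"{pipeline_accuracy([0.60, 1.00]):.1%}")  # even a perfect ML model
print(f"{pipeline_accuracy([0.90, 0.95]):.1%}")  # improving the OCR instead
```

Under these assumptions, a perfect ML model would only lift the pipeline from 57% to 60%, whereas improving the OCR from 60% to 90% would lift it to 85.5%.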

-

Making the annotation process a feedback loop: As mentioned in point no. 2, using human resources to annotate ground truth is expensive, both in terms of time and effort. So, if we can make the task easier for them and get better output from them, it is worthwhile. One of the things that we can try is to make the annotation process more of a feedback loop.

Let’s try to understand this with an example. Let’s say that for a particular task, we have to collect 1000 datapoints for training, and the annotators annotate the first 200 datapoints from scratch. We then train a model on those datapoints ( it might not perform very well, but it will perhaps still produce some decent output ). For the next batch of samples, we don’t ask the annotators to annotate from scratch. Instead, we give them the output of the trained model on the remaining samples and ask them to review it and correct whatever they see as incorrect. This way they are not annotating from scratch but only providing feedback on the predictions that they view as wrong, which saves time. Imagine, for example, that with only 200 examples we get a performance of 80%. That means that out of every 100 samples, the annotator will have to modify only about 20, which will save them a lot of time.
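The loop described above could be sketched roughly as follows. Everything here is hypothetical scaffolding: `annotate`, `review` and `train` are placeholder callables for the human annotation step, the human review step and your training routine, and retraining after every batch is my own assumption rather than something the example above requires.

```python
def assisted_annotation(samples, annotate, review, train,
                        seed_size=200, batch_size=200):
    """Model-assisted annotation loop (illustrative sketch).

    annotate(x)           -> label created from scratch by a human
    review(x, suggestion) -> label after a human accepts or corrects
                             the model's suggestion
    train(labeled)        -> callable model trained on (x, label) pairs
    Returns the labeled data and the number of suggestions corrected.
    """
    # Seed round: humans annotate from scratch.
    labeled = [(x, annotate(x)) for x in samples[:seed_size]]
    corrections = 0
    for start in range(seed_size, len(samples), batch_size):
        model = train(labeled)  # retrain on everything labeled so far
        for x in samples[start:start + batch_size]:
            suggestion = model(x)
            final = review(x, suggestion)
            corrections += int(final != suggestion)
            labeled.append((x, final))
    return labeled, corrections
```

Tracking `corrections` per batch also gives a rough running estimate of how well the model is doing on fresh data.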

-

Using loss to verify annotations: Human annotators can make mistakes. There are a lot of ways to minimise that, but many of them require extra manual labour and are therefore expensive ( for example, ensuring that more than one person goes over each annotation ). An easier and cheaper way is to use the loss value of the model. Note that this can only be used when we already have a decent model for the task and are annotating more data to further improve it. Here, the new set of datapoints annotated in the latest sprint can simply be passed through the model, and the loss value can be calculated for each datapoint separately. Since the loss gives a quantifiable measure of how confused the model is about a particular datapoint, we can re-check the datapoints for which the loss is quite high ( say, for example, an order of magnitude or two higher than the median loss ). Assuming that the model has a decent understanding of the task by this point, this can help point out wrong annotations with very little extra manual effort.
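Here is a minimal sketch of that check for a classification task, assuming the model outputs a probability per class and using cross-entropy as the per-datapoint loss. The function names, the `factor=10.0` default ( one order of magnitude above the median ) and the toy probabilities are assumptions for illustration only.

```python
import math
from statistics import median


def cross_entropy(probs, label):
    """Per-sample cross-entropy: -log of the probability of the annotated class."""
    return -math.log(max(probs[label], 1e-12))  # clamp to avoid log(0)


def flag_suspicious(prob_rows, labels, factor=10.0):
    """Return indices whose loss exceeds `factor` times the median loss."""
    losses = [cross_entropy(p, y) for p, y in zip(prob_rows, labels)]
    threshold = factor * median(losses)
    return [i for i, loss in enumerate(losses) if loss > threshold]


# Toy batch: the model strongly disagrees with the annotation at index 3.
prob_rows = [[0.9, 0.1], [0.8, 0.2], [0.95, 0.05], [0.01, 0.99], [0.85, 0.15]]
labels = [0, 0, 0, 0, 0]
print(flag_suspicious(prob_rows, labels))
```

The flagged datapoints are only candidates for re-checking: a high loss can also mean the model is wrong and the annotation is right.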

-

Keeping an eye on the model: During beta testing, it is advisable to have some sort of QC process and a feedback loop in place to get an idea of how the model is performing and to fine-tune it according to that feedback. Even after we deploy a model in production, we shouldn’t just heave a sigh of relief and stop monitoring it. This is because the distribution of the test set ( or holdout set ) on which we benchmarked the model might be quite different from the distribution of the data that it sees in production. Ideally, we should have some sort of QC in the production stage too, to ensure that the inferences the model makes in production are of good quality and within the performance levels expected from the model. If not, we should look into it and perhaps re-train the model with more samples representing the cases for which it is not performing well.
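One simple form such a production QC check could take is a rolling accuracy over the predictions that a human has reviewed. The class below is a hypothetical sketch: the name `RollingQC`, the window size and the `min_accuracy` threshold are all assumptions, and a real setup would also need a way to sample predictions for review.

```python
from collections import deque


class RollingQC:
    """Track accuracy over the most recent human-reviewed predictions."""

    def __init__(self, window=100, min_accuracy=0.9):
        self.results = deque(maxlen=window)  # keeps only the last `window` checks
        self.min_accuracy = min_accuracy

    def record(self, prediction, reviewed_label):
        """Store whether the model's prediction matched the reviewer's label."""
        self.results.append(prediction == reviewed_label)

    def accuracy(self):
        """Rolling accuracy, or None if nothing has been reviewed yet."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_attention(self):
        """True when rolling accuracy falls below the expected level."""
        acc = self.accuracy()
        return acc is not None and acc < self.min_accuracy


qc = RollingQC(window=4, min_accuracy=0.75)
for prediction, reviewed in [("a", "a"), ("b", "b"), ("a", "b"), ("b", "a")]:
    qc.record(prediction, reviewed)
print(qc.accuracy(), qc.needs_attention())
```

When `needs_attention()` fires, the reviewed samples that the model got wrong are natural candidates for the next round of re-training data.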

The list is not exhaustive by any means. Also, it is important to remember that some things might work well for some project and not so well for some other project. Stay tuned for the 2nd part of this series where I talk about some more lessons that I have learnt.

About the author: Rwik is a Machine Learning Engineer specializing in Deep Learning, working at an early stage startup ( NLP Domain ). He is experienced in using Deep Learning to automate critical business processes in production setting and building custom ETL pipelines. Connect with him on LinkedIn, Medium or email at rwikdutta@outlook.com. You can also find more about him at https://rwikdutta.com.
